This collaborative work between NVIDIA Research and Stanford University was awarded the Outstanding Paper Award at the 2024 Robotics: Science and Systems conference.

__________________________________

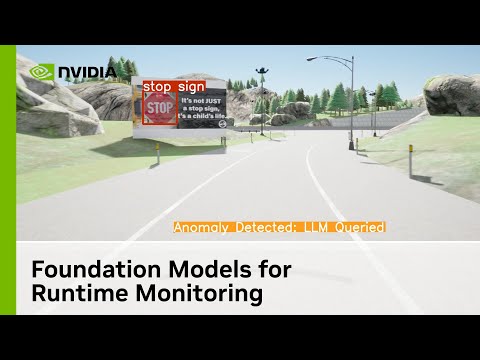

Foundation models, e.g., large language models (LLMs), trained on internet-scale data possess zero-shot generalization capabilities that make them a promising technology for detecting and mitigating out-of-distribution failure modes of robotic systems.

In this work, we present a two-stage reasoning framework:

1: A fast binary anomaly classifier analyzes observations in an LLM embedding space; when it flags an anomaly, it triggers a slower fallback selection stage.

2: The fallback selection stage uses the reasoning capabilities of generative LLMs.

We show that our fast anomaly classifier outperforms autoregressive reasoning with state-of-the-art GPT models, even when instantiated with relatively small language models. This enables our runtime monitor to improve the trustworthiness of dynamic robotic systems, such as quadrotors or autonomous vehicles, under resource and time constraints.
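To make the division of labor between the two stages concrete, here is a minimal sketch of how such a runtime monitor could be wired together. It is an illustration under stated assumptions, not the paper's implementation: the anomaly score (one minus the maximum cosine similarity to a cache of embeddings from nominal observations), the `threshold` value, and the names `EmbeddingAnomalyMonitor`, `two_stage_monitor`, and `select_fallback` are all hypothetical. In a real system the embeddings would come from a language model encoder and `select_fallback` would prompt a generative LLM; here both are replaced by toy vectors and a stub.

```python
import math
from typing import Callable, List, Optional, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors; returns 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class EmbeddingAnomalyMonitor:
    """Hypothetical fast stage: a binary classifier in embedding space.

    Assumption: an observation is anomalous when its embedding is far from
    every embedding in a cache of nominal (in-distribution) observations.
    """

    def __init__(self, nominal_embeddings: List[Vector], threshold: float):
        self.nominal = nominal_embeddings
        self.threshold = threshold  # assumed hyperparameter, tuned on nominal data

    def score(self, embedding: Vector) -> float:
        # Anomaly score = 1 - similarity to the closest nominal embedding.
        best = max(cosine_similarity(embedding, n) for n in self.nominal)
        return 1.0 - best

    def is_anomalous(self, embedding: Vector) -> bool:
        return self.score(embedding) > self.threshold


def two_stage_monitor(
    embedding: Vector,
    scene_description: str,
    monitor: EmbeddingAnomalyMonitor,
    select_fallback: Callable[[str], str],
) -> Optional[str]:
    """Run the cheap check every step; call the slow selector only on anomalies."""
    if monitor.is_anomalous(embedding):
        # Slow stage (hypothetical stub here): a generative LLM would reason
        # about the scene and pick a fallback plan.
        return select_fallback(scene_description)
    return None  # nominal: no intervention needed


if __name__ == "__main__":
    # Toy 3-D "embeddings" standing in for LLM embeddings of scene descriptions.
    monitor = EmbeddingAnomalyMonitor([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], threshold=0.3)
    stub = lambda description: "slow down and pull over"  # hypothetical fallback
    print(two_stage_monitor([1.0, 0.0, 0.0], "clear highway", monitor, stub))
    print(two_stage_monitor([0.0, 0.0, 1.0], "debris on road", monitor, stub))
```

The design choice this sketch illustrates is that the expensive generative call sits behind the inexpensive embedding comparison, so it only runs on the rare steps the fast classifier flags, which is what makes the approach attractive under the resource and time constraints mentioned above.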

Timestamps

00:00:28 - Real-time detection/response to anomalies is crucial for safety.

00:00:34 - NVIDIA & Stanford collaboration received Outstanding Paper Award at RSS 2024.

00:01:34 - Traditional systems often struggle with edge cases.

00:02:21 - NVIDIA’s platforms enable LLM deployment for real-world use cases.

00:02:59 - Autonomous robots tap into the reasoning power of LLMs.

00:03:14 - Leveraging generative AI within NVIDIA Omniverse.

Resources:

Project page: https://sites.google.com/view/aesop-llm?pli=1

RSS presentation: https://www.youtube.com/watch?v=2_XzYYLG2iY

Paper: https://arxiv.org/abs/2407.08735v1

Watch the full DRIVE Labs series: https://nvda.ws/3LsSgnH

Learn more about DRIVE Labs: https://nvda.ws/36r5c6t

Follow us on social:

Twitter: https://nvda.ws/3LRdkSs

LinkedIn: https://nvda.ws/3wI4kue

#NVIDIADRIVE #DRIVELabs #AutonomousVehicles #SelfDrivingCars #GenerativeAI #Simulation #LLM
